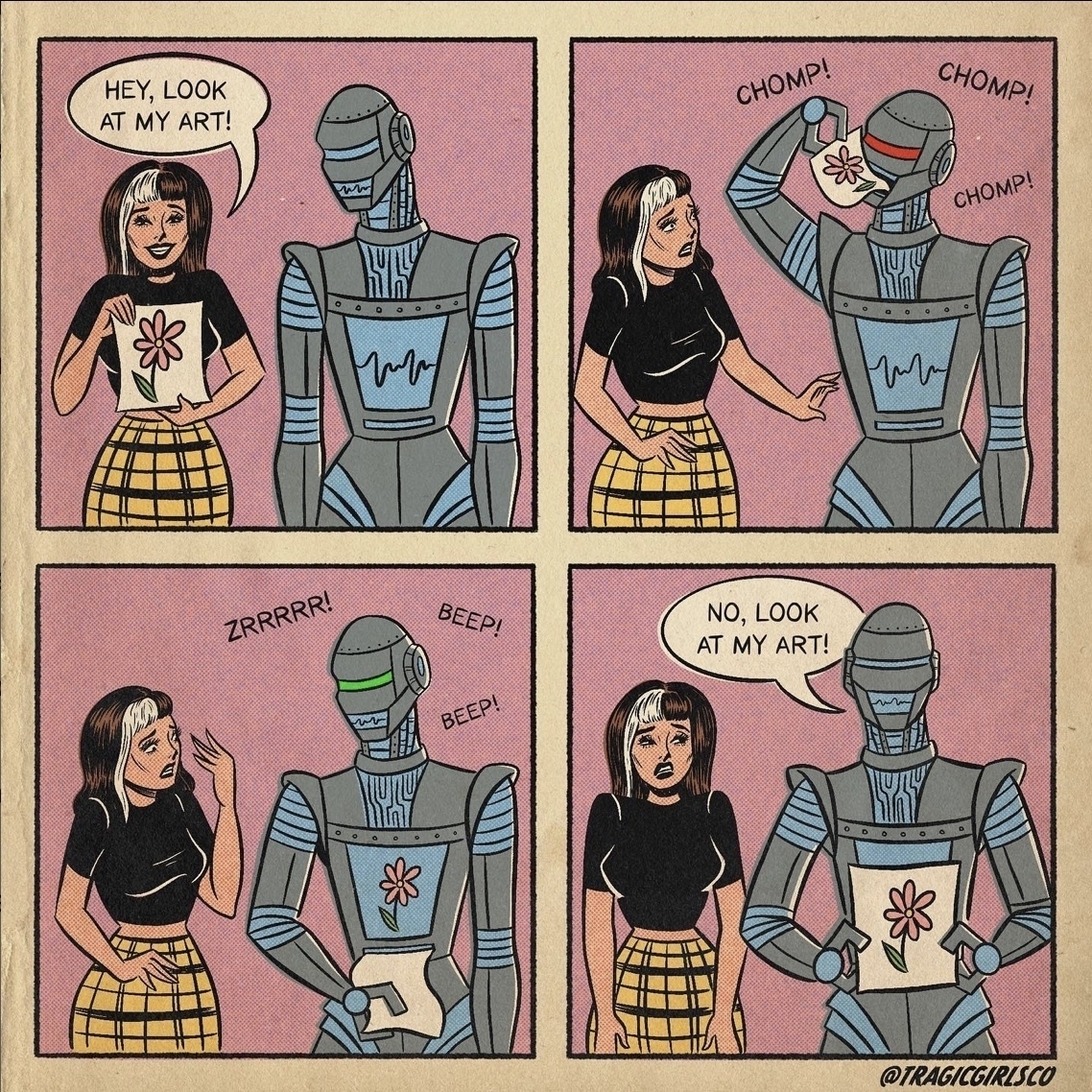

Source: Katie Mansfield / @tragicgirlsco.

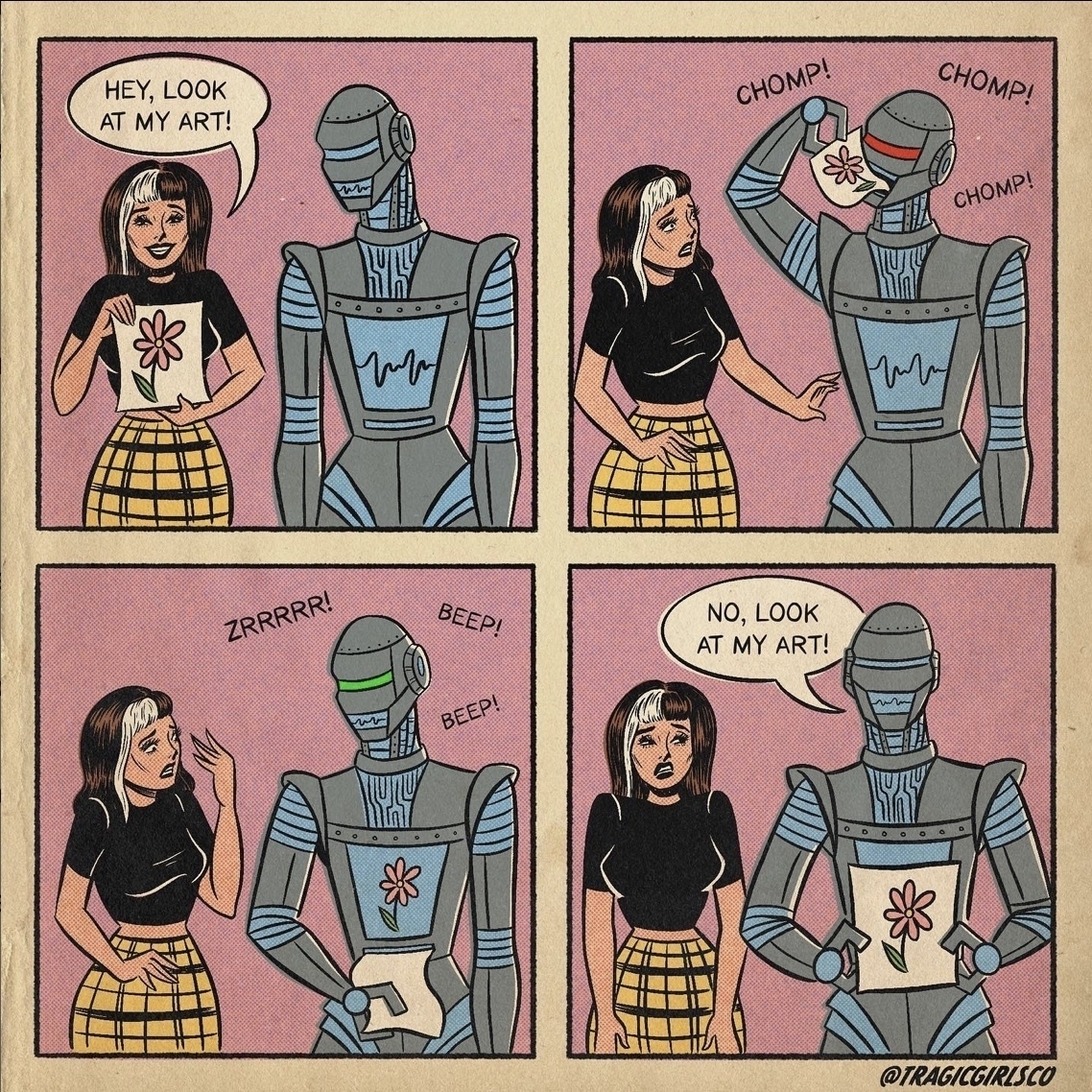

Source: Katie Mansfield / @tragicgirlsco.

I’m a sucker for stuff like this.

Currently reading: Breaking Bread with the Dead by Alan Jacobs 📚

Currently reading: Cloud Cuckoo Land by Anthony Doerr 📚

These are my notes to myself for adjusting the Z Offset for the BLTouch for my Ender 3V2 Printer.

I’m running OctoPrint and Klipper firmware.
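The arithmetic in these notes comes down to the offset formula in the steps below. Here is a minimal Python sketch of it. The function name `new_bltouch_z_offset` and the example numbers are made up for illustration; only the formula itself comes from the notes.

```python
def new_bltouch_z_offset(current_offset: float, paper_test_z: float) -> float:
    """Return a corrected BLTouch z_offset.

    current_offset: the z_offset currently set in printer.cfg.
    paper_test_z:   the absolute Z position (after G28 and G90) where the
                    paper test passed; negative if the nozzle had to go
                    below Z0.
    """
    # new offset = current_bltouch_z_offset - current_z_position
    # A negative paper_test_z (nozzle went below Z0) increases the offset.
    # Round to three decimals so float noise doesn't end up in the config.
    return round(current_offset - paper_test_z, 3)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    current = 2.0
    paper_z = -0.25
    print(f"z_offset: {new_bltouch_z_offset(current, paper_z):.3f}")
```

With an existing offset of 2.0 and a paper test that passes at Z = -0.25, the new offset comes out to 2.25. The 0.25 mm below zero gets added to the old value.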

1. `G28`
2. `G90`: Switch to absolute position mode.
3. `G1 Z3`: Move down to Z +3mm.
4. Keep stepping down (`G1 Z2`, etc.) until you get to the depth where the paper test passes.
5. New offset = `current_bltouch_z_offset - current_z_position`. So if our absolute Z position goes below zero, we want to add that difference to the existing offset.
6. Update `z_offset` in `printer.cfg` and save it.
7. `restart`
8. Check that `Z0` is at the right position.
9. Run `BED_MESH_CALIBRATE` again.

Speaking of eBooks, if you aren’t familiar with Standard Ebooks, check it out! Free, high-quality eBooks (in all formats) of classic out-of-copyright texts. Not a bad place for an end-of-year donation, either.

I feel so torn about eBooks sometimes. I absolutely love my Kindle — it’s one of my favorite things. I love how small and portable it is, and I love having so many books always available, and I love how easy it is to find and get new books.

On the other hand, I love physical books, especially used books. And I love being able to look at a real bookshelf and consider what I might want to read.

I want more analog experiences in my life, but alas, I just can’t get away from my Kindle. Then again, the Kindle might be the most analog-esque digital device I own. Interesting.

Finally got my old bookshelf speakers out of a box in the garage and set them up in my bedroom. I should have done this a long time ago!

I got the first generation of AirPods Pro shortly after they came out, and I’ve loved them. They’re super small and portable, and while the noise cancelling isn’t quite up to the level of my Bose QC 35s, they are so light and easy to transport that I often grab them instead.

Unfortunately, my pair has been subject to the crackle of doom for a while now, and over the last few weeks it’s gotten noticeably worse.

It being Christmas time, I opted to get myself a little gift, and bought the second generation AirPods Pro — and they’re really great!

The noise cancellation in these is so good it feels like magic. The first generation ANC was great, especially for something so small. But it’s blown away by the new AirPods. I can have my music paused in the gym and it feels nearly silent. They’re amazing.

The new volume gesture on the “stick” is almost worth the upgrade on its own. I was always annoyed to have to reach for my phone to adjust the volume, but I didn’t realize just how often I was doing that until I had an alternative. The gesture feels natural, it works every time, and it really makes the experience of wearing the AirPods feel better.

The next big test will be wearing them during a flight. Typically, I travel with my bulky QC 35s because the quieting is so good, but with these new AirPods I think I can get away with leaving them at home.

All in all, this has been a really great upgrade! Highly recommended.

My question about all this is: And then? You rush through the writing, the researching, the watching, the listening, you’re done with it, you get it behind you — and what is in front of you? Well, death, for one thing. For the main thing.

If tools like GPT result in the creation of large amounts of new content, and then that content gets ingested by the next generation of the models, at what point does that impact the quality of the new output?

Is all future output bounded by the quality of whatever was available broadly circa late 2021?

Imagine the attention these ML systems will attract once it becomes public that big companies/governments/individuals use them for any given task. Suddenly, there will be immense incentive to poison the datasets in subtle but impactful ways. Then we’ll see attacks that are both very direct (compromise the humans overseeing the “safety” of the system) as well as very indirect (massive content farms taking advantage of known weaknesses/limitations to poison the data pool(s)).

Imagine when someone hacks one of these organizations’ systems, then subtly alters the precedence used when ingesting information!

And then there’s figuring out how to inject the right prompts to leverage RCE vulnerabilities!

And the more “capable” they become, the bigger the attack surface!

From this thought-provoking article by Jessica Martin (via Alan Jacobs):

in our world dominated by online representation, we feel our physical expressions to be ephemeral, powerless, invisible. If we are not on the internet, we think we are not really present at all.

And – however our merciful God might redeem our terrible choices – there’s something very, very wrong about that.

(Emphasis mine)

I’ve been chewing on what feels like a bunch of related ideas around the central theme of Being Human. This quote is one of them, and it feels relevant today as I’m thinking about a computer program mimicking human beings well enough to freak a bunch of people out.

Played around a bit this weekend with ChatGPT, which is currently at the peak of some kind of hype cycle.

My first reaction is that I really don’t like it, though a lot of it is impressive. I’ve been trying to figure out why my feelings are so negative. I haven’t been able to get to the bottom of it, but I thought I’d jot down some notes; maybe this will coalesce in the future, or just serve as an embarrassing misjudgment. Time will tell.

How to use a GitHub personal access token for cloning a repo:

$ git clone https://ghp_YOURTOKENHERE:x-oauth-basic@github.com/Organization/My-Repo.git
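If you’d rather not type the token straight into a command (where it likely ends up in your shell history), one option is to build the same URL in a script. Below is a minimal Python sketch. The helper names and the `GITHUB_TOKEN` environment variable are assumptions for illustration, not anything GitHub or git requires. It reproduces only the URL shape from the command above.

```python
import os
import subprocess


def token_clone_url(token: str, org: str, repo: str) -> str:
    """Build an HTTPS clone URL that authenticates with a personal access token.

    Same shape as the command above:
    https://<token>:x-oauth-basic@github.com/<org>/<repo>.git
    """
    return f"https://{token}:x-oauth-basic@github.com/{org}/{repo}.git"


def clone_with_token(org: str, repo: str, env_var: str = "GITHUB_TOKEN") -> None:
    """Clone org/repo using a token read from an environment variable.

    GITHUB_TOKEN is a hypothetical default name; raises KeyError if the
    variable isn't set, and CalledProcessError if git clone fails.
    """
    token = os.environ[env_var]
    subprocess.run(["git", "clone", token_clone_url(token, org, repo)], check=True)


if __name__ == "__main__":
    # Prints the URL from the example command, using its placeholder token.
    print(token_clone_url("ghp_YOURTOKENHERE", "Organization", "My-Repo"))
```

Either way, git stores the remote URL as given, token included, in the clone’s `.git/config` by default, so anyone who can read that file can read the token.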

Flagstaff Arizona October 17, 2022

Camera: SONY ILCE-7RM4A

Lens: FE 85mm F1.8

Focal Length: 85.0 mm

Aperture: f/11.0

Shutter Speed: 1/30

ISO: 100

Exposure Compensation: -2

Flagstaff Arizona October 17, 2022

Camera: SONY ILCE-7RM4A

Lens: FE 35mm F1.8

Focal Length: 35.0 mm

Aperture: f/13.0

Shutter Speed: 1/1000

ISO: 100

Exposure Compensation: -2

Flagstaff Arizona October 17, 2022

Camera: SONY ILCE-7RM4A

Lens: FE 35mm F1.8

Focal Length: 35.0 mm

Aperture: f/13.0

Shutter Speed: 1/1000

ISO: 100

Exposure Compensation: -2

Flagstaff Arizona October 17, 2022

Camera: SONY ILCE-7RM4A

Lens: FE 35mm F1.8

Focal Length: 35.0 mm

Aperture: f/13.0

Shutter Speed: 1/200

ISO: 100

Exposure Compensation: -2

Flagstaff Arizona October 17, 2022

Camera: SONY ILCE-7RM4A

Lens: FE 35mm F1.8

Focal Length: 35.0 mm

Aperture: f/1.8

Shutter Speed: 1/2500

ISO: 100

Exposure Compensation: -2

Flagstaff Arizona October 17, 2022

Camera: SONY ILCE-7RM4A

Lens: FE 35mm F1.8

Focal Length: 35.0 mm

Aperture: f/13.0

Shutter Speed: 1/640

ISO: 100

Exposure Compensation: -2